Introduction:

Regression analysis is one of the core tools of statistics that helps investigate relationships between variables. If there is only a single explanatory variable, it is termed “Simple Regression” – It is often difficult to come across such situations in real life. Computing a simple regression involves fitting a straight line through the scatter graph obtained from the sample. From coordinate geometry basics, recall that a straight line has the equation:

y=c + mx

where m = slope of the line, and c is the y intercept.

There can of course be many straight lines through a scatter plot.. Regression analysis picks ONE line in particular – The criteria used is to minimize the sum of squares of the errors (distance from the points in the scatter plot to the line constructed).

If there is more than one variable on which the prediction depends, it is called “Multiple Regression” – It accounts for multiple additional factors (separately) so that the effect of each (independent) variable on the dependent variable can be assessed.

The equation for a (linear) multiple regression can be expressed as:

y = a+bx1+cx2+…

Mathematically, it is identical to “Simple Regression”. For example, in solving a 2 parameter regression, we need 3 dimensions – so, instead of estimating a straight line, we select a single “plane” – again such that the sum of squares of errors is minimum.

We can in fact, extrapolate this to an arbitrarily large number of independent variables. Fortunately, Computers have no problems crunching the numbers using matrix algebra to solve numerous simultaneous equations to arrive at the results.

Using Matrix Algebra to Solve Multiple Regression:

Let X be the data matrix of the predictor (independent) variables.

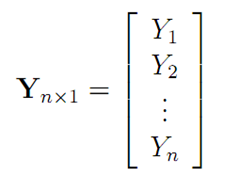

Let Y be the data vector representing the criterion (dependent) variable.

and, Let ‘b’ be the data vector representing the regression coefficients.

The formula for computing b (coefficient matrix) using Matrix algebra is given by the following formula:

b = (X’X)-1X’Y

The proof of this equation is quite simple:

1. The simplified equation for a (simple) linear regression in matrix terms is Y=Xb+e

2. Assume that the average error (e) will equal 0. The equation becomes Y=Xb and we need to find the value of ‘b’

3. multiply both sides of the equation by X’ (transpose of X) : X’Y = X’Xb

4. We are trying to get rid of X’X on the RHS.. so multiply both sides of the equation with (X’X)-1 – the inverse of X’X:

(X’X)-1X’Y = (X’X)-1(X’X)b

5. Any matrix multiplied by its inverse is the identity matrix I:

(X’X)-1X’Y = Ib

6. Ib = b = (X’X)-1X’Y

[Note: the above equation contains the Moore-Penrose pseudoinverse matrix which ensures that inverses take place on SQUARE matrices. A pseudo-1=(A’A)-1 *A’

To solve equation Y = Xb, the naive solution would be to multiply both sides by X-1

X-1Y = X-1Xb, and thus

X-1Y = bHowever, recall that matrix inverses are only defined on Square matrices. We cannot guarantee that the matrix of independent variables will be a square matrix..in fact, it never is! the sample size needs to be reasonable to ensure correct results. The use of a pseudoinverse matrix solves this problem nicely.]

Example:

A typical workflow involving regression analysis goes like this:

- Formulate a hypothesis about the relationship between variables of interest

- Gather data from a representative sample

- Compute regression parameters and prove (or disprove) the hypothesis

A common example of multiple regression analysis is in college Student Admissions. The admissions committee bases its decision on numerous factors that it believes will influence the students GPA.

For example, a college could hypothesize that the GPA that will be attained by the admitted student is dependent on his/her Highschool GPA, SAT score (V+Q) and Letters of recommendation (The strength of recommendation letters will of course need to be quantified)

A sample of students currently attending the college is then surveyed for their current college GPA, high school GPA, SAT scores and Recommendation letter strength.

The predictor equation for college GPA (dependent variable) is :

Y’ = a+b1X1+b2X2+b3X3

where Y’ = Predicted GPA

{b1, b2, b3} = Regression coefficients

a = Intercept

X1 = HS GPA, X2 = SAT Score and X3 = Strength of Recommendation letters

So, once the coefficients and intercept are determined, it is quite easy to plug in values for X1, X2, X3 and “predict” the future GPA of a student.

Implementation:

Although there are numerical statistical packages that can easily compute regression parameters, they are all geared towards “offline” computation. That is, data is collected first, and then the numbers are crunched to arrive at the result. I was recently involved in a project that required online regression arithmetic – i.e., display the relevant regression parameters at the end of an online survey. I decided to write my own PHP library for this purpose (complete source code is attached at the end of this blog).

Each observation can be written as an equation as follows:

For explanatory purposes, consider a simple linear regression. In matrix terms, the equation looks like:

We can then solve for {b0,b1}… Note how the X matrix needs to be filled with 1’s in the first column. The exact same approach works for multiple regression too – We just use larger matrices!

‘n’ is the sample size (number of observations)

Please refer to the word doc attached in the download for a detailed derivation on why solving the above matrix equations gives us the sum of least square errors.

The various formulae used are:

- b = (X’X)-1X’Y (Regression coefficients. This is an nX1 array)

- SSR = b’X’Y – (1/n) (Y’UU’Y) (sum of squares due to regression – this is a scalar. U is a unit vector of dimensions nX1)

- SSE = Y’Y-b’X’Y (Sum of squares due to errors – scalar)

- SSTO = SSR+SSE (Total sum of squares – scalar)

- dfTotal = sample_size – 1 (Total degrees of freedom)

- dfModel = num_independent – 1 (Model degrees of freedom)

- dfResidual = dfTotal – dfModel (Residual degrees of freedom)

- MSE = SSE/dfResidual (Mean square error – scalar)

- SE = (X’X)-1*(MSE) then take Square Root of elements on the diagonal

- t-stat = b[i][j]/SE[i][j]

- R2= SSR/SSTO

- F = (SSR/dfModel)/(SSE/dfResidual)

The heart of the library is the Lib_Matrix class. It handles all required matrix manipulation operations. A fluent interface has been provided where possible to make code more intuitive and readable. The Regression computation is performed in the “Lib_Regression” class. I have also attached the complete Unit test suite (100% code coverage) to help you better understand the API.

| File Name | Purpose |

| Matrix.php | Core Matrix Manipulation |

| Regression.php | Compute various regression parameters |

| MatrixTest.php | Complete unit test suite for both matrix and regression classes |

| Excel Regression.xlsx | Regression performed on the test case testRegressionPassingArrays() using MSexcel |

| MyReg.csv | Sample CSV file used by function testRegressionUsingCSV() |

An example API use scenario is described below:

//independent variables.

$x = array(

array(1, 8, 2),

array(1, 40.5, 24.5),

array(1, 4.5, .5),

array(1, .5, 2),

array(1, 4.5, 4.5),

array(1, 7, 8),

array(1, 24.5, 40.5),

array(1, 4.5, 2),

array(1, 32, 24.5),

array(1, .5, 4.5),

);

//dependent variables

$y = array(array(4.5),

array(22.5),

array(2),

array(.5),

array(18),

array(2),

array(32),

array(4.5),

array(40.5),

array(2));

$reg = new Lib_Regression();

$reg->setX($x);

$reg->setY($y);

//NOTE: passing true to the compute method generates standardized coefficients

$reg->Compute(); //go!

var_dump($reg->getSSE());

var_dump($reg->getSSR());

var_dump($reg->getSSTO());

var_dump($reg->getRSQUARE());

var_dump($reg->getF());

var_dump($reg->getRSQUAREPValue());

var_dump($reg->getCoefficients());

var_dump($reg->getStandardError());

var_dump($reg->getTStats());

var_dump($reg->getPValues());

Click here to download the regression library(Github)

References:

http://marketing.byu.edu/htmlpages/books/pcmds/REGRESS.html

http://davidmlane.com/hyperstat/prediction.html

http://luna.cas.usf.edu/~mbrannic/files/regression/regma.htm

http://www.geog.ucsb.edu/~joel/g210_w07/lecture_notes/lect09/oh07_09_2.html

http://home.ubalt.edu/ntsbarsh/Business-stat/otherapplets/pvalues.htm#rtdist

This is absolutely fantastic. Can’t wait to invert some matrices!

Hi,

This is incredible! Do you have any plans to write a logistic regression, too? Please let me know!

You helped me a lot with your library! This is exactly what I looked for. Thank you!

When implementing within my application, found a tiny bug though – it does not calculate regression in case of only one independent variable and constant 0 model. Short investigation showed that there is a problem with the Inverse() function in the Matrix class. Here’s a quick fix for that. You should consider including this in the source code, cause you did pretty good job here and it can help a lot of people 🙂

function Inverse()

{

if (!$this->isSquareMatrix())

throw new Exception(“Not a square matrix!”);

$rows = $this->rows;

$columns = $this->columns;

$newMatrix = array();

if ($rows==1 && $columns==1) {

$newMatrix[0][0] = 1;

$invertedMatrix = new Lib_Matrix($newMatrix);

$invertedMatrix = $invertedMatrix->ScalarDivide($this->GetElementAt(0,0));

}

else {

for ($i = 0; $i < $rows; $i++)

{

for ($j = 0; $j GetSubMatrix($i, $j);

if (fmod($i + $j, 2) == 0)

{

$newMatrix[$i][$j] = ($subMatrix->Determinant());

} else

{

$newMatrix[$i][$j] = -($subMatrix->Determinant());

}

}

}

$cofactorMatrix = new Lib_Matrix($newMatrix);

$invertedMatrix = $cofactorMatrix->Transpose()->ScalarDivide($this->Determinant());

}

return $invertedMatrix;

}

This works EXCELLENT for up to 9 variables. Once you hit 10, it jumps into an endless computation loop and never returns values. Any reason behind this? 8, even 9 works stellar. I am referring to the size of the independent variable arrays.

I added a function to this library that I use a lot. The user supplies an optional array of x values, and the regression is used to provide predicted y values. The function also supplies an array of residuals.

/*********

* Use the regression parameters to calculate the predicted f(x)

* The $x matrix must have a column of 1’s

*/

public function forcast($x=$this->x){

$mx = new Lib_Matrix($x);

$coeff_mat = array();

foreach ($this->coefficients as $value){

$coeff_mat[] = array ($value);

}

//print_r($this->coefficients);

$coeff = new Lib_Matrix($coeff_mat);

$forcast_mat = $mx->Multiply($coeff);

$x_mat = new Lib_Matrix($x);

$xNoOnes = $x_mat->GetSubMatrix(count($this->x) + 1, 0);

$residual_mat= $xNoOnes->Subtract($forcast_mat);

$this->residulas = $residual_mat->getInnerArray();

$this->forcast = $forcast_mat->getInnerArray();

$this->pred_int = 1;

return $this->forcast;

}

I added a function to this library that I use a lot. The user supplies an optional array of x values, and the regression is used to provide predicted y values. The function also supplies an array of residuals.

/*********

* Use the regression parameters to calculate the predicted f(x)

* The $x matrix must have a column of 1’s

*/

public function forcast($x=$this->x){

$mx = new Lib_Matrix($x);

$coeff_mat = array();

foreach ($this->coefficients as $value){

$coeff_mat[] = array ($value);

}

//print_r($this->coefficients);

$coeff = new Lib_Matrix($coeff_mat);

$forcast_mat = $mx->Multiply($coeff);

$x_mat = new Lib_Matrix($x);

$xNoOnes = $x_mat->GetSubMatrix(count($this->x) + 1, 0);

$residual_mat= $xNoOnes->Subtract($forcast_mat);

$this->residulas = $residual_mat->getInnerArray();

$this->forcast = $forcast_mat->getInnerArray();

$this->pred_int = 1;

return $this->forcast;

}

If you want confidence intervals and prediction intervals from your regression, you can use the following. It is necessary to evaluate a Student T inverse, so I’ve attached some code to perform that too in lieu of the PECL math_stat library:

/************

* Caclulate the confidence interval and prediction interval

* of the regression at the points in $x. The result is an array of arrays. Each

* row contains the confidence interval in index 0 and the

* prdiction interval in index 1.

*/

public function intervals($x = false, $p=.05){

$this->count = count($this->x);

if(!$x){

$mx = new Lib_Matrix($this->x);

$xNoOnes = $mx->GetSubMatrix($this->count + 1, 0);

$x = $xNoOnes->getInnerArray();

}

$sumx = 0;

$sumy = 0;

for ($i=0;$icount;$i++){

$sumy += $this->y[$i][0];

$sumx += $this->x[$i][1];

}

$this->yave = $sumy / $this->count;

$this->xave = $sumx / $this->count;

$this->devysq = 0;

$this->devxsq = 0;

$devxy = 0;

for($i=0;$icount;$i++){

$devy = $this->y[$i][0] – $this->yave;

$this->devysq += pow($devy, 2);

$devx = $this->x[$i][1] – $this->xave;

$this->devxsq += pow($devx, 2);

$devxy += $devy*$devx;

}

$this->devxysq = pow($devxy,2);

$this->STEyx = sqrt(1/($this->count-2)*($this->devysq-$this->devxysq/$this->devxsq));

$t = students_t_inv_2t($p, $this->count – 2);

foreach ($x as $value){

$inner = 1/$this->count+pow($value[0]-$this->xave,2)/$this->devxsq;

$this->intervals[] = array($t*$this->STEyx*sqrt($inner),$t*$this->STEyx*sqrt(1+$inner));

}

return $this->intervals;

}

/***************

* See http://www.dtic.mil/dtic/tr/fulltext/u2/642495.pdf

*/

function regularized_incomplete_beta($x,$a,$b){

if(is_int($a)){

//Equation 50 from paper

$sum = 0;

for ($i=1;$i<=$a;$i++){

$sum += pow($x,$i-1)*gamma($b+$i-1)/gamma($b)/gamma($i);

}

return 1-pow((1-$x),$b)*$sum;

}

else if ($b == .5){

if ($a == .5){

//Equation 61 from paper

return 2/pi()*atan(sqrt($x/(1-$x)));

}

//Equation 60a from paper

$k = $a + .5;

$sum = 0;

for ($i=1;$i<=$k-1; $i++){

$sum += pow($x,$i-1)*gamma($i)/gamma($i+.5)/gamma(.5);

}

return regularized_incomplete_beta($x, .5, .5) – sqrt($x-$x*$x)*$sum;

}

else{

//Equation 59 from paper

$sum = 0;

$j = $b + .5;

for($i=1;$i= 2

for ($i = 2; $i <= $in; $i++) {

$out *= $i;

}

return $out;

}

/**********

* Calculate the students t value at a point

*/

function students_t($x,$v){

if (is_int($v)){

return gamma(($v+1)/2)/sqrt($v*pi())/gamma($v/2)*pow(1+pow($x,2)/$v,-1*($v+1)/2);

}

return false;

}

/***********

* Calculate the cumulative t value up to a point, left tail

*

*/

function students_t_cum($t, $v){

if(!is_int($v)) return false;

$x = $v/($t*$t+$v);

$a = $v/2;

$b=.5;

return 1-.5*regularized_incomplete_beta($x,$a,$b);

}

/************

* Calculate the area of the two tails of students t

*/

function students_t_2t($t, $df){

return 2*(1-students_t_cum($t, $df));

}

function students_t_inv($p, $df){

$Ixv2half = 2-2*$p;

//echo "dif Ix(v/2,.5): " . $Ixv2half . "”;

$x_guess = .75;

$limit = .00000000001;

$dx = 1;

if (($df % 2) == 1){

$k = $df/2 + .5;

while ($dx > $limit){

$Is_sq = pow($Ixv2half – regularized_incomplete_beta($x_guess, .5, .5),2);

//echo “dif Is²: ” . $Is_sq . “”;

//Equation 60a from paper

$sum = 0;

for ($i=1;$i<=$k-1; $i++){

$sum += pow($x_guess,$i-1)*gamma($i)/gamma($i+.5)/gamma(.5);

}

//echo "sum: " . $sum . "”;

$a = 1;

$b = -1;

$c = $Is_sq/$sum/$sum;

//echo “c: ” . $c . “”;

$x_new = (-1*$b+sqrt($b*$b-4*$a*$c))/2/$a; //quadratic formula

$dx = abs($x_new – $x_guess);

$x_guess = $x_new;

//echo $x_guess.””;

}

}

else{

while ($dx > $limit){

$sum = 0;

for ($i=1;$i<=$df/2;$i++){

$sum += pow($x_guess,$i-1)*gamma(.5+$i-1)/gamma(.5)/gamma($i);

}

//echo "sum: " . $sum . "”;

$x_new = 1-pow((1-$Ixv2half)/$sum,2);

$dx = abs($x_new – $x_guess);

$x_guess = $x_new;

//echo $x_guess.””;

}

}

$t = sqrt(($df – $df*$x_guess)/$x_guess);

//echo $t;

return $t;

}

/******************

* Calculate the inverse of the two tailed t CDF

*/

function students_t_inv_2t($p, $df){

return students_t_inv(1-$p/2, $df);

}

Last Update. Attached is a function to do some simple outlier analysis within this class. This will calculate Cook’s D and DFFITS on each point in the regression.

/*********************

* Perform some routine outlier tests

* The array contains:

* 0:Cook’s D

* 1:DFFITS

* Note: both equations are set for regression of the form y=mx+b

* The “count-3” probably needs to be adjusted for higher

* order regressions.

*/

public function outliers(){

$mx = new Lib_Matrix($this->x);

$mhat = $mx->Multiply($mx->Transpose()->Multiply($mx)->Inverse()->Multiply($mx->Transpose()));

foreach($this->residuals as $i=>$value){

$leverage = $mhat->GetElementAt($i, $i);

$this->outliers[$i][0] = $value[0] * $value[0] * $leverage/$this->SSEScalar/2/pow(1-$leverage, 2);

$MSei = ($this->SSEScalar * (1-$leverage) – $value[0] * $value[0]) / (1-$leverage) / ($this->count – 3);

$rstudent = $value[0] / sqrt($MSei*(1-$leverage));

$this->outliers[$i][1] = $rstudent * sqrt($leverage/(1-$leverage));

}

return $this->outliers;

}

i just want to say thank you very much

this is absolutely amazing…

Thanks for that great class. During my time when studying I created something similar with C++ Builder for DAX prognose. Now I am very happy that there is an PHP class which I will need now. I will do some experiments on the stock market 🙂